Autonomy is not intelligence: why the future of unmanned systems must remain human

Sponsored by Quantum Systems

In the midst of Russia’s war against Ukraine, one idea has gained remarkable traction: that fully autonomous drones represent the future of defence. Fewer humans, more machines, faster outcomes. Autonomy, in this narrative, is treated as a proxy for progress and often even for intelligence.

This is a dangerous misconception.

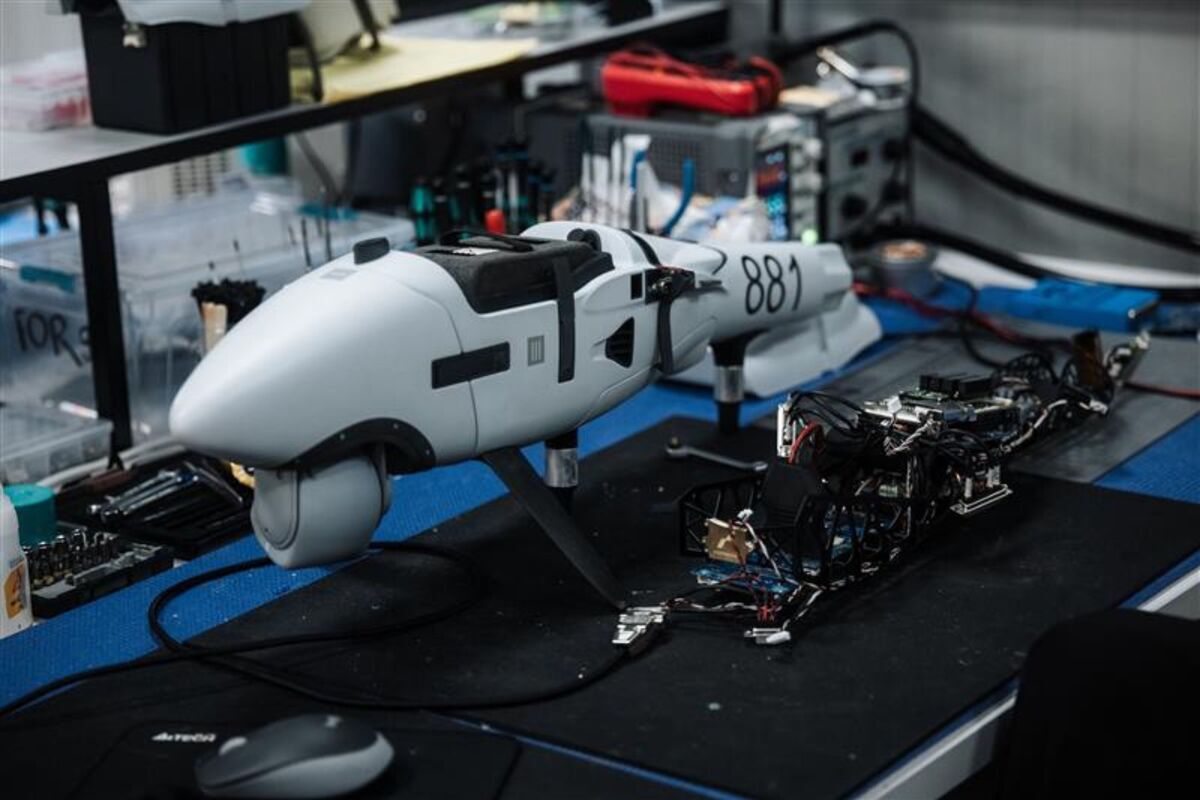

Ukraine’s ongoing resistance against Russia has shown the world, in the starkest possible terms, how profoundly modern warfare has changed. Large, expensive and slow-to-adapt systems are no longer the decisive factor. Instead, smaller, software-defined unmanned systems dominate the battlefield because they are fast to adapt, cost-efficient and integrated into a broader information ecosystem.

The lesson from Ukraine is that what matters most is not how long a system can operate without human input. What matters is whether it can help humans see, understand and decide faster than their opponent.

The reality of the modern battlefield

Nowhere else has it become so clear how unforgiving real-world conditions are for technology. Systems operate in contested and unpredictable environments. GPS signals disappear. Communications are disrupted. Data is incomplete, outdated or contradictory. These are no longer edge cases; they are the baseline.

Fully autonomous systems may perform impressively in controlled settings, but in the field, when circumstances shift unexpectedly, the risk of failure increases sharply. Intelligence is not about operating in a vacuum but about handling ambiguity, context and uncertainty – areas where humans remain essential.

This is why autonomy is so often mistaken for intelligence. We assume that removing humans from the loop automatically makes a system more advanced. In reality, it often removes the very element that allows systems to cope with complexity.

What AI is actually good at

Artificial intelligence has become indispensable in modern unmanned systems, not because it replaces human judgment but because it addresses a very practical problem: cognitive overload.

Modern conflicts generate enormous amounts of information: video feeds, sensor data, maps, alerts and signals arriving simultaneously. No human can process all of this in real time. AI excels at filtering noise, prioritising relevant signals, detecting patterns and preparing information for decision-makers.

In this sense, AI’s most meaningful role today is supportive. It shortens the path from observation to understanding, reduces the likelihood of human error in high-pressure situations and enables faster, better-informed decisions. The final responsibility, however, remains in human hands.

Meaningful human control is not a brake on innovation

New technologies are transforming warfare while raising uncomfortable moral questions. Who is responsible when machines make life-or-death decisions? How do we ensure compliance with international law? How do democratic societies compete with adversaries who ignore ethical limits altogether?

The answer cannot be to stand still. Speed matters, and those who disregard rules will not pause for ethical debate. Equally, the response of democratic societies cannot be to abdicate responsibility.

Meaningful human control remains crucial. This does not mean humans must manually operate every function – automation handles speed, repetition and data processing. Humans provide context, judgment and ethical responsibility when needed, especially in complex or ambiguous situations. We must continue to invest and drive innovation – maintaining a technological edge means that this moral imperative can remain our strength, not a disadvantage on the battlefield.

Autonomy in weapons systems is not new – it ranges from automated air defence systems to fire-and-forget missiles. What AI does is accelerate and expand existing forms of automation. The decisive factor is therefore not whether autonomy exists, but how oversight is ensured, under what rules and with what transparency, when systems encounter ambiguous circumstances.

Regulation must keep pace with reality

Regulation in defence technology is essential. Clear red lines must be drawn where ethics, responsible use and international law are concerned. At the same time, regulatory frameworks must be fast and adaptive enough to reflect today’s security realities.

Throughout Europe, clearer and more agile rules are needed to enable responsible innovation rather than stifling it. If regulation lags too far behind technological and geopolitical developments, democratic states risk losing the ability to protect their people, their sovereignty and their values.

This debate matters not only for Ukraine, but for Europe’s own security – from the Baltic states on NATO’s eastern flank to critical infrastructure protection at home.

The future will be unmanned – but not unhuman

Some argue that AI will inevitably make wars faster and more dangerous. The truth is more nuanced. AI can also reduce mistakes, improve situational awareness and help protect lives, if used responsibly.

Progress will not be defined by removing humans completely from the equation. It will be defined by how well systems support people under pressure, how transparently they communicate their limits and how firmly responsibility remains with human decision-makers.

The future of defence will indeed be increasingly unmanned. But intelligence is not measured by autonomy alone. And if we get this wrong, we risk building systems that are fast, but blind – powerful, but clueless in complex situations.

For democratic societies, that is a risk we cannot afford.

By Vito Tomasi, Managing Director, Quantum Systems UK

Business Reporter Team

© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543